Today we release a big step in improving Collabora Online installability for home users. Collabora has typically focused on supporting our enterprise users who pay the bills, most of whom are familiar with getting certificates, configuring web server proxies, port numbers, and so on (with our help). The problem is that this has left home users, eager to take advantage of our privacy and ease of use, with a large barrier to entry. We set about adding easy-to-setup Demo Servers for users - but of course, people want to use their own hardware and not let their documents out of their sight. So - today we've released a new way to do that - using a new PHP proxying protocol and app-image bundled into a single-click installable Nextcloud app (we will be bringing this to other PHP solutions soon too). This is a quick write-up of how this works.

How things used to work in an ideal world

Ideally a home user grabs CODE as a docker image, or installs the packages on their public-facing https:// webserver. Then they create their certificates for those hosts, configure their use in loolwsd.xml and/or set up an Apache or NginX reverse proxy so that the SSL unwrap & certificate magic can be done by proxying through the web-server.

This means that we end up with a nice C++ browser, talking directly through a C kernel across the network to another C++ app: loolwsd, the web-services daemon. Sure we have an SSL transport and a websocket layered over that, and perhaps we have an intermediate proxying/unwrapping webserver, but life is reasonably sensible. We have a daemon that loads and persists all documents in memory and manages and locks-down access to them. It manages low latency, high performance, bi-directional transport - over a persistent, secure connection to our browsers. We even re-worked our main-loops to use microsecond waits to avoid some silly one millisecond wastage here and there. Here is how it looks:

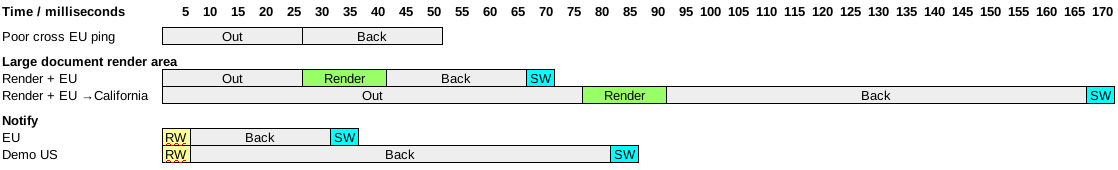

This shows a particularly poor cross-European ping (Finland to Spain) and a particularly heavy document re-rendering, as well as some S/W time to render the result in the browser. This works reasonably nicely for editing on a server in San Francisco from the EU. Push notifications are fast too.

The problem is - this world seems hard for casual users to configure.

A websocket replacement: trickier with PHP & JS

Ideally we could set up the web server to proxy our requests nicely from the get-go, and of course Collabora Online is inevitably integrated with an already-working PHP app of some sort. So - why not re-use all of that already working PHP-goodness?

PHP, of course, has no built-in support for web-sockets for this use-case. Furthermore, PHP processes are expected to be short-lived and spawned quickly by the server, and by default they are killed for bad behavior if they are still around after a few (say 15) seconds.

From the JavaScript side too - we don't appear to have any API we can use to send low-latency messages across the web except the venerable XMLHttpRequest API that launched a spasm of AJAX and 'Web 2.0' a couple of decades ago. So - we can make asynchronous requests, but we badly need very low latency to get interactive editing to work - so how is that going to pan out?

Clearly we want to use https or TLS - but negotiating a secure connection requires a handshake that is rich in round-trip latency: exactly what we don't want.

Mercifully - web browser & server types got together a long time ago to do two things: create pools of multiple connections to web servers to get faster page loads, and also to use persistent connections (keep-alive) to get many requests down the same negotiated TLS connection.

So it turns out that in fact we can avoid the TLS overhead, and also make several parallel requests without a huge latency penalty, despite the XMLHttpRequest API. Excellent - for client connections we lose little latency, so we can stack our own binary and text, web-socket-like protocol on top of this.
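
To make "stacking a protocol on top" a little more concrete, here is a minimal sketch of how several text and binary messages might be batched into one request body. The framing here (a one-byte `T`/`B` tag plus a four-byte length) is purely an assumption for illustration and is not the wire format loolwsd actually uses; `pack_frames` and `unpack_frames` are hypothetical names.

```python
import struct
from typing import List, Union

Message = Union[str, bytes]


def pack_frames(messages: List[Message]) -> bytes:
    """Concatenate messages into one body: tag byte, 4-byte length, payload."""
    out = bytearray()
    for msg in messages:
        if isinstance(msg, str):
            kind, payload = b"T", msg.encode("utf-8")  # text frame
        else:
            kind, payload = b"B", bytes(msg)           # binary frame
        out += kind + struct.pack(">I", len(payload)) + payload
    return bytes(out)


def unpack_frames(data: bytes) -> List[Message]:
    """Inverse of pack_frames: split a body back into its messages."""
    messages: List[Message] = []
    pos = 0
    while pos < len(data):
        kind = data[pos:pos + 1]
        (length,) = struct.unpack_from(">I", data, pos + 1)
        start = pos + 5  # 1 tag byte + 4 length bytes
        payload = data[start:start + length]
        messages.append(payload.decode("utf-8") if kind == b"T" else payload)
        pos = start + length
    return messages
```

The point is only that one HTTP round trip can carry many small messages in each direction, which is what lets a request/response transport stand in for a websocket.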

Running our daemon

Clearly we want to avoid our document daemon being regularly killed every few seconds, but luckily by disowning the spawned processes this is reasonably trivial. When we fail to connect, we can launch an app-image that can be bundled inside our PHP app close to hand, and then get it to serve the request we want.
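
The connect-or-launch idea can be sketched roughly as follows. This is a Python analogue rather than the real PHP; the function name, the timeout and the launch command are all assumptions for illustration.

```python
import socket
import subprocess
from typing import List, Optional, Tuple


def connect_or_launch(address: Tuple[str, int], launch_cmd: List[str],
                      timeout: float = 0.5) -> Optional[socket.socket]:
    """Return a socket connected to the daemon, or None after starting it."""
    try:
        return socket.create_connection(address, timeout=timeout)
    except OSError:
        # Nobody is listening: start the daemon in its own session with no
        # inherited stdio, so it is not tied to this short-lived request.
        subprocess.Popen(
            launch_cmd,
            stdin=subprocess.DEVNULL,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            start_new_session=True,
        )
        return None
```

A caller that gets `None` back would presumably wait briefly and retry, since the freshly launched daemon needs a moment before it accepts connections.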

Initially it was thought that this should be as simple as reading the php://input and writing it to a socket connected to loolwsd and then reading the output back and writing it to php://output. Unfortunately things are never quite that easy:

PHP as a proxy: the problems

If you love PHP, probably you want to read the Proxy code - three hundred lines, most of which should not be necessary.

The most significant problem is that we need to get data through Apache, into a spawned PHP process and send it on via a socket quickly. Many default setups love to parse, and re-parse, the PHP for each request, so when we first attempted to integrate the prototype with Nextcloud's PHP infrastructure (allowing every app to have its say, and so on) we went from ~3ms to ~110ms of overhead per request. Not ideal. Thankfully with some help, and a tweak to the NginX config, we can use our standalone proxy.php as above; nice.

Then it is necessary to get the HTTP request, its length, and content re-constructed from what PHP gives you. This means iterating over the versions of headers that PHP has carefully parsed, to carefully serialize them again. Then comes trying to get the request body. php://input sounds like the ideal stream to use at this point, except that (un-mentioned in the documentation) for RFC 1867 post handling it is an empty stream, and it is necessary to re-construct the mime-like sub-elements in PHP.
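
The re-serialization step looks roughly like this. It is a Python sketch of the idea rather than the actual proxy.php; `build_raw_request` is a hypothetical name, and it assumes the body has already been recovered as bytes (which, per the above, is the hard part for multipart posts).

```python
from typing import Dict


def build_raw_request(method: str, target: str,
                      headers: Dict[str, str], body: bytes) -> bytes:
    """Re-serialize an already-parsed request into raw HTTP/1.1 bytes."""
    lines = [f"{method} {target} HTTP/1.1"]
    for name, value in headers.items():
        # Drop the length we were handed; it is recomputed from the body below.
        if name.lower() == "content-length":
            continue
        lines.append(f"{name}: {value}")
    lines.append(f"Content-Length: {len(body)}")
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("latin-1") + body
```

Recomputing Content-Length from the re-constructed body matters here: if the body had to be rebuilt from parsed sub-elements, its byte length need not match what the browser originally sent.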

After all this - we then get a beautiful clean blob back from loolwsd containing all the headers & content we want to proxy back. Unfortunately, PHP is not going to let you open php://output and write that across - so, we have to parse the response, and manually populate the headers before dropping the body in. Too helpful by half.
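
Going the other way, the returned blob has to be pulled apart again before it can be handed back. A minimal Python sketch of that split follows; `split_raw_response` is a hypothetical name, and it assumes a simple response with no chunked encoding.

```python
from typing import List, Tuple


def split_raw_response(raw: bytes) -> Tuple[int, List[Tuple[str, str]], bytes]:
    """Split a raw HTTP response into (status code, header pairs, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("latin-1").split("\r\n")
    # A status line looks like "HTTP/1.1 200 OK"; the code is the second field.
    status = int(status_line.split(" ", 2)[1])
    headers = []
    for line in header_lines:
        name, _, value = line.partition(":")
        headers.append((name.strip(), value.strip()))
    return status, headers, body
```

In PHP the three pieces would then presumably be fed to `http_response_code()`, `header()` and `echo` in turn, which is exactly the parse-then-re-emit round trip lamented above.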

After doing all of this we are ~3ms older per request or more.

It would be really lovely to have a php://rawinput and a php://rawoutput that provided the sharp tool we could use to shoot ourselves in the foot more quickly.

Polling vs. long-polling

Our initial implementation was concerned about reply latency - how can we get a reply back from the server as soon as it is available? We implemented an elegant scheme to have a rotating series of four 'wait' requests which we turned over every few seconds before the web-server closed them. When a message from loolwsd needed sending we could immediately return it without waiting for an incoming message. Beautiful!

This scheme fell foul of the fact that each PHP process you have sitting there waiting consumes a non-renewable resource - my webserver defaults to only ~10 processes, so this limited collaboration to around two users without gumming the machine up: horrible. Clearly it is vital to keep PHP transaction time low, so we need to get in and out very fast. All those nice ideas about waiting a little while for responses to be prepared before returning a message sound great, but they consume this limited resource of concurrent PHP transactions.
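
The "around two users" figure falls straight out of the numbers above. As a back-of-the-envelope check, assuming every parked wait request pins exactly one PHP process:

```python
def users_before_exhaustion(php_workers: int, waits_per_user: int) -> int:
    """How many users can park all their wait requests at once."""
    return php_workers // waits_per_user


print(users_before_exhaustion(10, 4))  # 2
```

With ~10 processes and four waits each, two users already hold eight of them, leaving only a couple for everything else the web server has to do.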

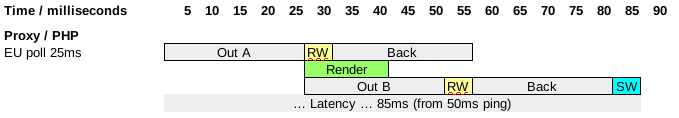

So - bad news: it is necessary to do rabid polling to sustain multiple concurrent users. That is rather unfortunate. This picture shows how needing to get out of Apache/PHP fast gives us an extra polling period of latency:

The solution

We have to ping the server every ~25ms or so (this is around half our target in-continent latency). Luckily we can back off from doing this too regularly when we notice that our polls find nothing going out or coming back. Currently we exponentially reduce our polling frequency from every 25ms to every 500ms when we notice nothing is happening on the wire. That means that another collaborator might have typed something and we might only catch up 500ms later, but of course anything we type will reset that, and show up very rapidly:
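
A minimal sketch of that back-off follows. The 25ms floor and 500ms ceiling come from the text; the doubling factor and the function names are assumptions, since we only say the reduction is exponential and the real client code may well differ.

```python
from typing import List


def next_poll_interval(current_ms: float, saw_traffic: bool,
                       min_ms: float = 25.0, max_ms: float = 500.0,
                       factor: float = 2.0) -> float:
    """Return the delay before the next poll, in milliseconds."""
    if saw_traffic:
        # Anything sent or received snaps us back to the fastest rate.
        return min_ms
    # Nothing on the wire: wait longer, but never beyond the cap.
    return min(current_ms * factor, max_ms)


def idle_schedule(polls: int) -> List[float]:
    """Intervals used by consecutive idle polls, starting from the minimum."""
    interval = 25.0
    schedule = []
    for _ in range(polls):
        schedule.append(interval)
        interval = next_poll_interval(interval, saw_traffic=False)
    return schedule
```

With doubling, an idle session reaches the 500ms ceiling after five quiet polls (25, 50, 100, 200, 400, then 500), and a single keystroke drops it straight back to 25ms.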

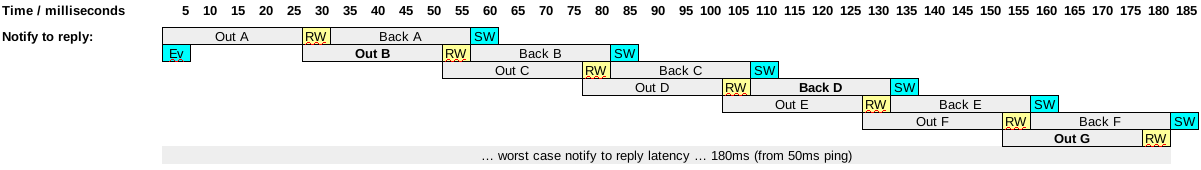

Of course, the worst case where the server needs to get information to the client to respond (and it goes without saying that our protocol is asynchronous everywhere) - is around an inter-continental latency: event 'Ev' misses the train, and is sent with B, the response missing the train 'C' and returning in Back 'D', then the browser reply missing Out 'F' and the data getting back in Out 'G':

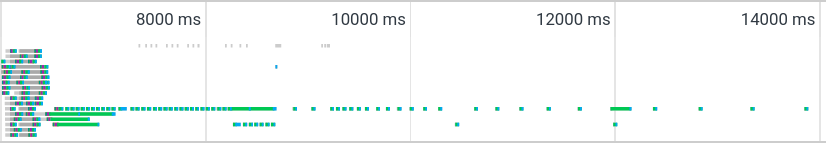

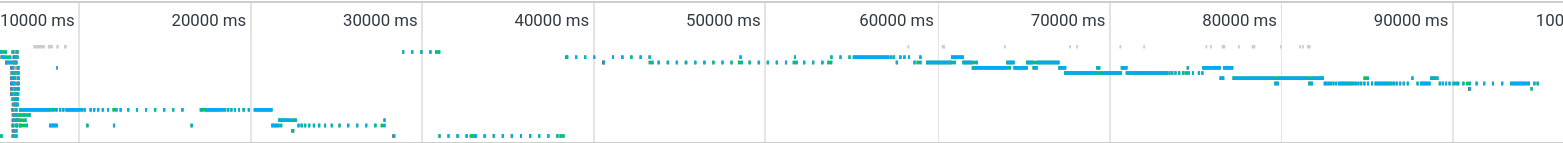

So much for design spreadsheets - what does it look like in practice? There is a flurry of connections at startup, and then a clear exponential back-off - we continue to tune this:

As we get into the editing session you can see that connections are re-used, and new ones are created as we go along:

Limitations & Caveats

Perhaps an interesting approach, this comes with a few caveats:

- Security - while the app-image is signed & verified, and built from our stock packages - it is no longer run with the capabilities necessary to isolate each document into its own chroot jail. Isolation is thereby diminished. We also disable this mode by default in normal installs; check out the proxy_prefix setting in loolwsd.xml if you want something similar.

- AMD64 - for now we provide only x86 / 64bit AppImages.

- Latency - as discussed extensively above latency will be slightly higher: this is not recommended for inter-continental usage. Of course - just following the ideal instructions will give you much better performance when you have it working.

- Bandwidth - The individual HTTP requests each with many headers have a significant overhead and are much more verbose than the minimal binary websocket protocol.

- Scalability - this should work really nicely for home users with a few people collaborating. If you tweak your PHP FPM or enable OpCache you can make everything quicker for yourself, not just proxying. For larger numbers of users you'll want a properly managed and configured Collabora Online - perhaps with an HA cluster, and/or GlobalScale.

All in all, once you have things running, it is a good idea to spend a little extra time installing a docker image / packages and configuring your web-server to do proper proxying of web-sockets.

Thanks & what's next

Many thanks to Collaborans: Kendy, Ash, Muhammet, Mert & Andras, as well as Julius from Nextcloud - who took my poor quality prototype & turned it into a product. Anyone can have a bad idea - it takes real skill and dedication to make it work beautifully; thank you. Of course, thanks too to the LibreOffice community for their suggestions & feedback.

Next: we continue to work on tuning and improving latency hiding, for example grouping together the large number of SVG icon images that we need to load and cache in the browser on first-load - which will be generally useful to all users. And of course we love to be able to continue investing in better integration with our partners' rich solutions.